Pay-by-bag works for most communities, but not Hopkinton

Every time Jolene Cochrane clocks in for her shift at the Hopkinton Transfer Station, she sees residents driving in with their household waste bagged in various colored trash bags. With 19 years of experience at the station, a few years longer than...

Pembroke seminar on climate change aims to set the record straight

Ayn Whytemare doesn’t like the view from the top of her hayfield in Pembroke. From there, rising from pines like a lonely skyscraper, stands the smokestack used by the Bow coal plant, the last facility of its kind in New England. And while the visible...

Most Read

Regal Theater in Concord is closing Thursday

Former Franklin High assistant principal Bill Athanas is making a gift to his former school

Another Chipotle coming to Concord

Vandals key cars outside NHGOP event at Concord High; attendee carrying gun draws heat from school board

Phenix Hall, Christ the King food pantry, rail trail on Concord planning board’s agenda

On the Trail: New congressional candidate spotlights border, inflation, overseas conflicts

Editors’ Picks

Concord martial arts studio builds life skills far beyond combat

Red barn on Warner Road near Concord/Hopkinton line to be preserved

Hometown Hero: Quilters, sewers grateful for couple continuing ‘treasured’ business

Searchable Concord salary database: Top earners include more police, fewer women

Sports

Boys’ lacrosse: With a different level of energy and focus, MV feels primed for success

PENACOOK - Merrimack Valley head coach Sean Gill knew something was different about his team this year when 15 of his 25 players wanted to interview to be a team captain. Only four were ultimately chosen, but so far, Gill’s had a whole sideline he can...

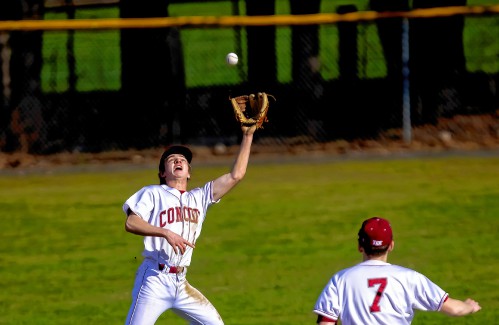

Baseball: Concord makes eight errors but shows reasons for optimism in wild extra-inning loss

High schools: Monday’s baseball, softball, lacrosse and tennis results

Obiri, Lemma claim Boston Marathon

High schools: Concord boys’ lax earns first win, plus more weekend results

Opinion

Opinion: Proposed height zoning change for Concord’s Main Street

Steve Duprey is the owner of the Duprey Companies. The proposed zoning change to allow a 90-foot building on Main Street, in appropriate cases, where current zoning limits height to 80 feet, makes sense and should be adopted. First, a...

Opinion: How our twin toddlers turned our lives (and chairs) upside down

Opinion: New Hampshire, it’s time to drive into the future

Politics

Sununu says he’ll support Trump even if he’s convicted

As jury selection begins this week in the hush-money trial of former President Donald Trump, New Hampshire Gov. Chris Sununu says he doesn’t believe many voters view Trump’s criminal indictments, his actions on Jan. 6, 2021, or his election denialism...

NH mayors want more help from state on homelessness prevention funds

Two Democrats with parallel views run for same State Senate seat

House passes bill removing exceptions to state voter ID law

League of Women Voters suing over AI robocalls sent in NH

Arts & Life

NH Furniture Masters present new member show this spring

The NH Furniture Masters are pleased to feature the work of three new members in our spring exhibition: Dan Faia, Mike Korsak, and Philip Morley. This exhibit celebrates the creativity and dedication of our newest members and brings together a diverse...

Vintage Views: The greatest factory that never was

Inspired by Robert Frost, New Hampshire Poet Laureate Jennifer Militello has achieved her childhood dreams

Inspired by Robert Frost, New Hampshire Poet Laureate Jennifer Militello has achieved her childhood dreams

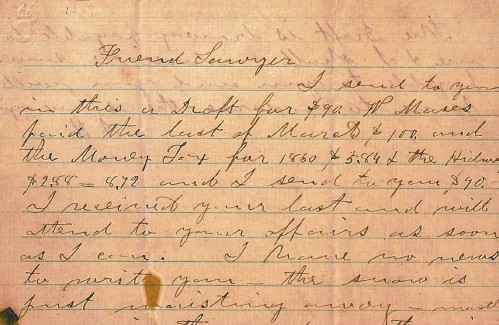

From the archives: Civil War brewing

Obituaries

Jane A. Haskell

Boscawen, NH - Jane A. Haskell, 95, died peacefully on Saturday, April 13, 2024, at Merrimack County Nursing Home. She was born in Dover, NH, the daughter of Amy (Towle) and Harold Brow...

Patricia L. Hews

Concord, NH - Patricia L. Hews passed away peacefully on April 3, 2024. "Pat," as she was known by most, was born on November 30, 1931, in Dover-Foxcroft, Maine. She is the daughter of Marga...

Candice Myatt

Rochester, NH - Candice E. Myatt, 74, of Rochester, NH, formerly of Barnstead, NH, passed away unexpectedly on Wednesday evening at her home. She grew up in Weare, NH, with her father Carl Hoyt Sr...

Darla Jean Welcome

Penacook, NH - Darla Jean Welcome died very unexpectedly on Friday, April 7, 2024. Darla was predeceased by her husband, Dean Welcome, and her father, David Randlett Jr. She is survived by her mot...

Softball: Maddy Wachter Ks 12, Concord holds off Winnacunnet in 2023 championship rematch

‘Money is a driver’: Amid inflation and tight labor market, city fighting to stem employee outflow

Hooksett’s new tower could improve coverage in Bow

Rare bipartisan gun bill gets NH Senate hearing

Wildfire season is here: Concord at moderate risk; high risk further south

Court: Communities can’t reject solar on looks, property value fears alone

Mayors say need is growing for state homelessness prevention funds

Opinion: Bankers have the NH Public Deposit Investment Pool in their sights

High schools: Tuesday’s track, baseball, softball, lacrosse and tennis results

Opinion: Members of NH Jewish community write letter to NH congressional delegation

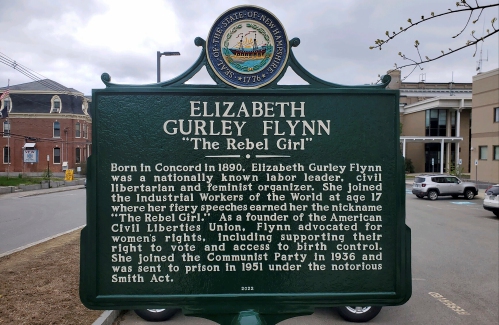

Opinion: Members of NH Jewish community write letter to NH congressional delegation Opinion: Whatever a court decides, Elizabeth Gurley Flynn retains an important place in American labor history

Opinion: Whatever a court decides, Elizabeth Gurley Flynn retains an important place in American labor history Sunapee Kearsarge Intercommunity Theater presents ‘Olympus On My Mind’ in April

Sunapee Kearsarge Intercommunity Theater presents ‘Olympus On My Mind’ in April