‘A little piece of everything I like’: New Pittsfield barbershop brings more than a haircut to downtown

With a 1940s barber pole marking the outside and the fresh smell of a clean shave wafting from the window, the new single-chair barbershop is impossible to miss in downtown Pittsfield.

New Hampshire targets sexual exploitation and human trafficking inside massage parlors

Dark curtains drawn tight, doors locked at all hours, surveillance cameras inside the building and unusual business hours — these are all warning signs that a massage parlor may be a front for something more than therapeutic services.

Most Read

New Hampshire legalizes public alcohol consumption in designated ‘social districts’

New Hampshire providers brace for Medicaid changes that reach beyond healthcare

Warner town administrator granted restraining order against selectman

Hotel makeover underway in downtown Concord

Opinion: Dear Gov. Ayotte, let’s talk about the books

State rules Epsom must pay open-enrollment tuition to other school districts, despite its refraining from the program

Editors’ Picks

A Webster property was sold for unpaid taxes in 2021. Now, the former owner wants his money back

Report to Readers: Your support helps us produce impactful reporting

City prepares to clear, clean longstanding encampments in Healy Park

Productive or poisonous? Yearslong clubhouse fight ends with council approval

Sports

Athlete of the Week: Grace Saysaw, Concord High School

Concord High junior Grace Saysaw cemented herself as one of the Crimson Tide’s best sprinters of the past few years with a record-breaking end to the spring.

Local golfers tee off at 122nd Amateur Championship

Opinion

Opinion: Trumpism in a dying democracy

Opinion: What Coolidge’s century-old decision can teach us today

Opinion: The art of diplomacy

Opinion: After Roe: Three years of resistance, care and community

Opinion: Iran and Gaza: A U.S. foreign policy of barbarism

Politics

New Hampshire school phone ban could be among strictest in the country

When Gov. Kelly Ayotte called on the state legislature to pass a school phone ban in January, the pivotal question wasn’t whether the widely popular policy would pass but how far it would go.

Sununu decides he won’t run for Senate despite praise from Trump

Arts & Life

Arts in the Park returns for July

Concord Arts Market will host its second monthly Arts in the Park event on Saturday from 10 a.m. to 3 p.m. in Rollins Park.

Hopkinton art gallery showcases “Creativity Beyond Convention”

AROUND CONCORD: Your guide to free summer music

Around Concord: Steps to nowhere – but it used to be somewhere

Around Concord: Refreshing recipes from Table Bakery

Obituaries

Mary Ann Hermann

Baltimore, MD - Mary Ann Hermann died in her home in Baltimore on July 5, 2025, following a battle with cancer. She was 91. Mary Ann was a retired psychiatric nurse who worked in hospitals and visiting patients at their homes in Cuba, ...

David Franklin Whitney

Hillsboro, NH - David F. Whitney, 87, a lifelong resident of Hillsboro, NH, joined his bride Patricia A. (Wing) Whitney in Heaven Thursday, June 26, 2025. Born September 10, 1937, to Harry L. and Harriett S. Whitney at their home on Wh...

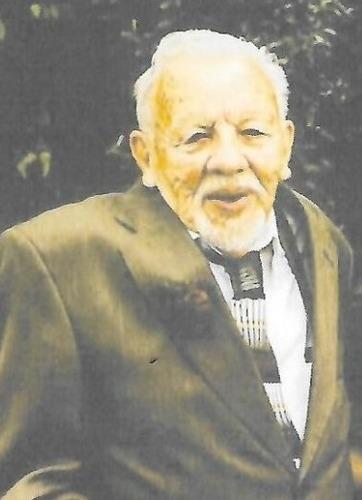

John Allen Fletcher

Epsom, NH - We are beyond sad to share that our father, John Allen Fletcher, is no longer here to share in our daily lives. Born April 28, 1935, he was blessed to have celebrated his 90th birthday in Florida with 4 of his 7 great-grandch...

Joan Reed

Concord, NH - Joan "Jody" Reed, age 90, of East Side Drive, passed away peacefully on Tuesday, July 8th, 2025, at Havenwood. She was born in Newport, NH, the daughter of the late Dr. Raymond Libby and Selma (Swedberg) Lib...

Youngsters Richardson and Hakala move on, and veterans crash out at 122nd State Amateur Championship

Natural disasters boost interest in receiving emergency alerts

Veterans not for profit Swim With A Mission holds annual Paintball, Swimming and Navy SEAL Gold Star Family Tribute Dinner

Concord Little League softball team to play in state championship at Martin Field in Concord against Mount Monadnock

Town turmoil: Chichester town administrator resigns again

Police investigate shooting at Sanbornton home that left one person injured

Lavender haze: Purple fields bloom at Warner farm

As Concord’s Gavin Richardson places second at golf Junior Amateur, young players look ahead to the 122nd State Amateur Championship

Sunapee’s Bryce Whitlow keeps memory of above-average MLB players alive through social media page ‘MLB Hall of (Pretty) Good’

Six local seniors play in CHaD East-West All-Star Football game; Nyhan wins MVP

Concord became a Housing Champion. Now, state lawmakers could eliminate the funding.

‘A wild accusation’: House votes to nix Child Advocate after Rep. suggests legislative interference

Town elections offer preview of citizenship voting rules being considered nationwide