Latest News

Spring things: Savoring the small moments

Two Weare select board members resign

‘I thought we had some more time’ – Coping with the murder-suicide of a young Pembroke mother and son

A half-eaten container of yogurt still sits in the fridge. A “Bluey” bike leans against the back door, and a small watering can rests untouched nearby, each item an aching reminder of the life that filled Bill Byrne’s home in Pembroke less than a week ago.

From New Hampshire to South Texas: Laconia Christian Academy students witness changed immigration system on border trip

Under a different president, the Guatemalan woman and her disabled son would have likely been 100 days into a new life in the United States. Instead, by the time a group of 10 students from Laconia Christian Academy arrived in South Texas late last month, the family was miles south, 100 days into an uncertain future at a makeshift camp in Mexico.

Most Read

To provide temporary shelter, Concord foots the bill for hotel stays for people experiencing homelessness

The Appalachian Trail in New Hampshire just got easier, as another debate looms over replacing structures in wilderness areas

State Police recover body from Merrimack River in Hooksett

Ramp from I-93 to I-89 to be closed for repairs Tuesday

Authorities believe mother shot three-year-old son in Pembroke murder-suicide

Granite Geek: There’s a very big battery in Moultonborough. We need a lot more of them

Editors Picks

The Monitor’s guide to the New Hampshire legislature

One year after UNH protest, new police body camera footage casts doubt on assault charges against students

‘It’s always there’: 50 years after Vietnam War’s end, a Concord veteran recalls his work to honor those who fought

‘We honor your death’ – Arranging services for those who die while homeless in Concord

Sports

High schools: Monday’s softball, baseball, lax, tennis and track results

Merrimack Valley 5, Plymouth 2

High schools: Weekend lacrosse results

High schools: Thursday’s softball, baseball, lax and track results

Program offers discounted golf rounds for young players at Concord’s Beaver Meadow

High schools: Thursday’s area baseball, lacrosse, tennis and track results

Opinion

Opinion: My memories of Vietnam 50 years later

Jean Stimmell, retired stone mason and psychotherapist, lives in Northwood and blogs at jeanstimmell.blogspot.com.

Opinion: Concord officials: Can we sit and talk?

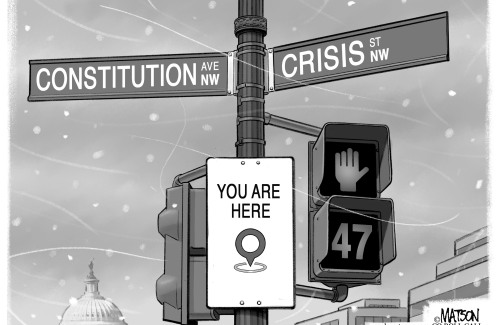

Opinion: Trump versus the U.S. Constitution

Opinion: Protect our winters!

Opinion: That was then. This is now.

Your Daily Puzzles

An approachable redesign to a classic. Explore our "hints."

A quick daily flip. Finally, someone cracked the code on digital jigsaw puzzles.

Chess but with chaos: Every day is a unique, wacky board.

Word search but as a strategy game. Clearing the board feels really good.

Align the letters in just the right way to spell a word. And then more words.

Politics

‘A wild accusation’: House votes to nix Child Advocate after Rep. suggests legislative interference

Rosemarie Rung thinks of Elijah Lewis often.

Sununu decides he won’t run for Senate despite praise from Trump

Arts & Life

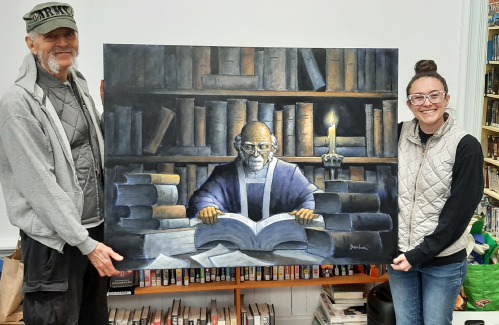

Donating “The Bibliophile”

On April 26, Tom Barber, an Andover resident and a well-known painter and illustrator since the 1970s, presented his painting of "The Bibliophile" to Michaela Hoover, director of the Andover Libraries.

The Last Stand Country Band performing in Allenstown

Garden Club blossoms in Chichester

Obituaries

George W. Bean

Concord, NH - A celebration of life for George W. Bean, who passed away on February 27th, 2025, will be held on Saturday, May 17th, 2025, from 1 PM to 5 PM at his and Jane's home at 42 Fisherville Rd., Concord, NH ...

Sarah Kinter

Canterbury, NH - Surrounded by the home of her dreams in Canterbury, New Hampshire, and eager to begin the new journey before her, Sarah Anne Kinter left this life on Thursday, May 8, 2025, to start joyously "partying upstairs", after ei...

Janet R. Boisvin

Concord, NH - Janet R. Boisvin, 81, died peacefully at her home on Thursday, May 8, 2025, after a period of failing health. The daughter of the late Elwin and Violet Jenkins, Janet was born on August 28, 1943, in Concord. She was a gr...

Barbara Jean Clark

Concord, NH - Barbara Jean Clark, age 85, of Concord, passed away at home on Wednesday, April 30, 2025. She was the loving wife of the late David L. Clark. Born in Portsmouth on February 24, 1940, Barbara was the daughter of the late R...

Beautify Allenstown hosting community cleanup day

Baseball: Syvertson suits up for CCA in narrow win over Franklin

Plans for lights at Concord’s Keach Park back on

Town elections offer preview of citizenship voting rules being considered nationwide

Medical aid in dying, education funding, transgender issues: What to look for in the State House this week

On the Trail: Shaheen’s retirement sparks a competitive NH Senate race

Brookford Farm’s annual heifer parade celebrates family, sustainability, organic farming

Pembroke City Limits brings yoga, book club, line dancing, and more to Suncook Village