New Hampshire committee seeks to prevent domestic fatalities like murder-suicide in Berlin

Marisol Fuentes needed help.

Kearsarge Food Hub turns 10, celebrates new era of food security work

Kathleen Bigford regarded Bradford, like she did most of New Hampshire, as a greying place.

Most Read

Surplus seller Ollie’s enters New Hampshire, opens in Belmont

Riverbend to close adult mental health housing facility in Concord due to funding challenges

Massachusetts man faces DWI charges after crash on I-93 in Hooksett

City officials reject the work of an outside consultant hired to lead Concord’s diversity initiatives

Man who wandered away from Mt. Washington summit found dead after long search

Editors Picks

A Webster property was sold for unpaid taxes in 2021. Now, the former owner wants his money back

Report to Readers: Your support helps us produce impactful reporting

City prepares to clear, clean longstanding encampments in Healy Park

Productive or poisonous? Yearslong clubhouse fight ends with council approval

Sports

Bow Brook Tennis Club hosts men’s A and B tournaments

The Bow Brook Club has a long history in Concord. Every year except during the two world wars, it has hosted a citywide men’s tennis tournament on red clay.

Concord National LL Softball wins State Championship and moves on to Little League Regional Tournament

Josiah Hakala of Beaver Meadow wins State Amateur golf championship

Youngsters Richardson and Hakala move on, and veterans crash out at 122nd State Amateur Championship

Athlete of the Week: Grace Saysaw, Concord High School

Opinion

Opinion: Trumpism in a dying democracy

Opinion: What Coolidge’s century-old decision can teach us today

Opinion: The art of diplomacy

Opinion: After Roe: Three years of resistance, care and community

Opinion: Iran and Gaza: A U.S. foreign policy of barbarism

Politics

New Hampshire school phone ban could be among strictest in the country

When Gov. Kelly Ayotte called on the state legislature to pass a school phone ban in January, the pivotal question wasn’t whether the widely popular policy would pass but how far it would go.

Sununu decides he won’t run for Senate despite praise from Trump

Arts & Life

Community Players of Concord celebrate 97th season, prepare for 98th

To celebrate the conclusion of their 97th season, the Community Players of Concord came together for an annual meeting that included a potluck dinner and the presentation of several theatrical awards.

Henniker Blues, Brews & BBQ Fest returns for fourth year

Artist Spotlight: Holly Emrick

Obituaries

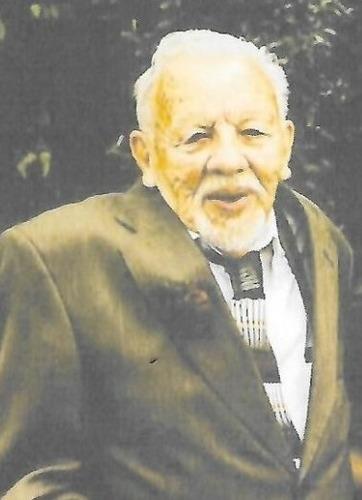

David Franklin Whitney

Hillsboro, NH - David F. Whitney, 87, a lifelong resident of Hillsboro, NH, joined his bride, Patricia A. (Wing) Whitney, in Heaven on Thursday, June 26, 2025. Born September 10, 1937, to Harry L. and Harriett S. Whitney at their home on Wh...

Myrna Prevost

Concord, NH - Myrna Braley Prevost, 88, died on July 11 at The Birches in Concord, New Hampshire. She was predeceased by her beloved husband of 62 years, Fernand Prevost. Myrna was born August 20, 1936, in Franklin, New Hampshire. The ...

Annette Lamoreau Wolfe

Concord, NH - Annette Lamoreau Wolfe passed away surrounded by family on 5/9/2025 at the age of 88. She was born in Presque Isle, Maine, the daughter of the late Paul Lamoreau and Ruth (Hasey) Lamoreau. Annette is survived by ...

D. Jacqueline Lavigne

Tilton, NH - Dorothy Jacqueline "Jackie" Lavigne, a native of Nashua, NH, and a longtime former resident of Tilton, NH, and Prescott Valley, AZ, passed away on July 3, 2025, at the age of 92, with her devoted children at her bedside. A...

‘No one tells you how to be an adult’: Concord father-son duo debuts disability and adulthood film on PBS

High school lacrosse, tennis All-State rosters announced

NHTI student competed in Miss Collegiate USA pageant: ‘A platform of kindness’

‘Friends-a-Palooza’ invites Concord community to practice kindness and outreach at Keach Park

Affordable townhouse expansion gets green light

Concord became a Housing Champion. Now, state lawmakers could eliminate the funding.

‘A wild accusation’: House votes to nix Child Advocate after Rep. suggests legislative interference

Town elections offer preview of citizenship voting rules being considered nationwide

Concert on the lawn coming to Pierce Manse

Nate Lavallee, July Young Professional of the Month: Stretching toward strength, self-care and Southern NH wellness
